From the article of the same title…

Smart people have a problem, especially (although not only) when you put them in large groups. That problem is an ability to convincingly rationalize nearly anything.

Go read it.

Via Daring Fireball

Ben Thompson is excellent again. The price of undifferentiated software converges to zero, and therefore the three main business models he sees are:

Another great post by Ben Thompson over at stratēchery:

Steve Ballmer restructured Microsoft yesterday as a functional organization. The immensity of this change can not be understated, nor can the risks. Ultimately, I believe the reorganization will paralyze the company and hasten its decline.

Read it.

Buckle up, I’m going all meta on you with this one. But don’t worry, there’s a drawing in there.

I felt I had a grasp of how Intelligence differs from Knowledge and Wisdom. When I tried to put it into words, I probably failed. But just now, a picture came to mind. I suspect my long hours with an unnamed turn-based strategy game have something to do with it; nonetheless, this metaphor came to me:

Intelligence is our army of soldiers and weaponry that we use to conquer Knowledge. Knowledge is the land we control: the dots on an infinite map. With enough Knowledge in our possession, Wisdom emerges. Wisdom is the ability to see connections among the dots of Knowledge, and (among other benefits) it shows us new land to conquer.

You see, this is not a war for a finite resource that we steal from someone else. Knowledge is boundless, as is the Wisdom it produces. And our growth in Knowledge and Wisdom will have a positive impact on our Intelligence in the next turn.

It is a self-propelling mechanism. Not all self-propelling mechanisms are necessarily good, but this one is a product of Evolution. We may be the branch that fails, but who says She plays with just our planet? The Lady will get it right somewhere.

I wanted to share five paragraphs of a customer support conversation I’d had with the rest of the team (two other guys). As I had IMed with one of them a few minutes earlier, I automatically went for the IM window. But the first thing that stopped me was remembering that the IM sometimes does not send longer messages. So I thought: “OK, there has to be some better way.”

Then it clicked: “Oh, wait, this is the sort of thing we have a Basecamp account for!” And that led me to realize one obvious disadvantage of abstract software.

Another piece of my mosaic of ideas. This excerpt from the book “Anything You Want” by Derek Sivers resonates with my own thoughts.

I am trying to spread one idea that seems obvious to me: web apps are ideally positioned to be very successful at getting a variety of jobs done for businesses, and to make a lot of money along the way. For now, that seems uninteresting to others.

Thanks to Jiří Sekera for reminding me about this one.

I was fortunate enough to speak at the Future of Web Apps + Future of Web Design double conference in Prague. First, I would like to thank Future Insights (previously Carsonified), and personally Cat Clark, for trusting me with the speakers’ wild card.

Below are my slides and the complete text of my 30-minute talk. I spoke from memory, so I probably digressed in a few places. Also, please forgive any typos or grammatical errors; I’m basically posting my notes and had no time for thorough proofreading.

I love Jony Ive’s speech at the celebration of Steve’s life that Apple held on its campus in October 2011, especially the beginning of it.

Steve used to say to me — and he used to say this a lot — “Hey Jony, here’s a dopey idea.”

And sometimes they were. Really dopey. Sometimes they were truly dreadful. But sometimes they took the air from the room and they left us both completely silent. Bold, crazy, magnificent ideas. Or quiet simple ones, which in their subtlety, their detail, they were utterly profound.

And just as Steve loved ideas, and loved making stuff, he treated the process of creativity with a rare and a wonderful reverence. You see, I think he better than anyone understood that while ideas ultimately can be so powerful, they begin as fragile, barely formed thoughts, so easily missed, so easily compromised, so easily just squished.

This may sound a bit too abstract, so let me give you a more tangible example.

Famously, when Albert Einstein was struggling to extend his special theory of relativity to gravity, he once imagined a man falling from a roof who does not feel his own weight.

So what? Everyone knows that one feels weightless when falling. Well, that is why we call Albert a genius: he was able to glimpse something important, something deep, in this piece of everyday obviousness.

The thing is, the falling man does not feel gravity because his own acceleration cancels it out exactly: locally, the effects of gravity and acceleration cannot be told apart. That equivalence became Einstein’s starting point, and he kept working from this perspective. He worked on it hard. Like, I mean really, really hard. For eight freaking years he worked on it. He worked on it so hard that in the end he was physically exhausted and ill.

It’s not that I’m so smart, it’s just that I stay with problems longer.

— Albert Einstein

The result? A total change in our view of the universe. There is actually no such thing as a gravitational force. It is all the warping of space and time.

Be careful with your simple ideas, be protective of them, work on them. Work on them for much longer than others do. Stay with them beyond the easy first conclusion you might have made. You will probably not change our view of the whole universe, but you may change the world, and that’s not too shabby either.

The trouble with this approach is that you have to be able to pick the right ideas or be able to recognize when you are on the wrong path with one.

Which brings me back to Steve. When asked “How do you know what’s the right direction?” in The Lost Interview (at 01:06:20), he said, after a pause to think:

You know, ultimately it comes down to taste. It comes down to trying to expose yourself to the best things that humans have done and then try to bring those things into what you’re doing. I mean, Picasso had a saying, he said: Good artists copy, great artists steal.

And that’s a different topic.

James Hamilton wrote an article about using ARM processors in servers. He describes how the ARM architecture offers a better price/performance ratio and consumes less power.

It’s a very nice example of disruption. You see, ARM started in a small niche: processors constrained by low power consumption. Over the years, they built up a knowledge advantage in this field.

And as mobile computing in the form of smartphones and tablets takes over the world, ARM is no longer a niche player; it provides the architecture for billions of processors.

And now ARM is going up-market. Interestingly enough, it is beginning to take on the very high end of the processor market: servers.

How long before it strikes Intel’s real fortress, desktop processors? Well, maybe not that long.

Another interesting aspect is that ARM licenses its architecture, so others can produce the chips or even design their own, as Apple does for its iPhones and iPads.

This will look off-topic, dear front-end design and coding friends, but trust me, I will tie it back to design.

I bet you’ve heard the song “Somebody That I Used To Know”, originally by Gotye. Catchy tune, a bit more indie/hippie than I like, but I will give them the lyrics: those are timeless, as is the occasional need to perform open surgery on our own feelings, because, you know, nobody else understands us.

You may also have heard this phrase in its more humble (and original) form, “let a hundred flowers bloom”. I don’t know about you, but I usually come across it in a business context, where it means that a business should try many different approaches at the same time: for example, trying many different products for different segments and seeing what catches on.

I’ve always had a problem with this phrase.

I am convinced that for anything to succeed in the web business today, it needs to be polished in what it does. As John Gruber puts it:

Figure out the absolute least you need to do to implement the idea, do just that, and then polish the hell out of the experience.

The problem is, you can’t polish the experience of a thousand, or even a hundred, flowers at the same time, no matter how big a company you are. See Google.

Polishing is usually thought of as the “last 20 %” of a project, maybe less. Applying the 80/20 rule here, I would suggest that this may be exactly the 20 % that produces 80 % of the results. So we would be wise to put a lot of effort into it, which is exactly what you can’t do with a “thousand flowers”.

And anyway, why should you listen to something a known mass murderer proposed?

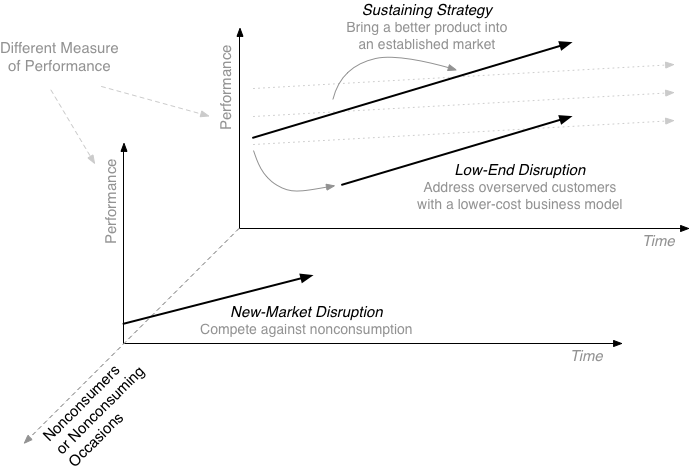

This article should give you a quick overview of Clayton Christensen’s groundbreaking book The Innovator’s Solution, and then I will try to apply its point to the web application industry.

Quoting from the book:

Disruptive innovations … don’t attempt to bring better products to established customers in existing markets. Rather, they disrupt and redefine that trajectory by introducing products and services that are not as good as currently available products. But disruptive technologies offer other benefits–typically, they are simpler, more convenient, and less expensive products that appeal to new or less-demanding customers.

…Disruption has a paralyzing effect on industry leaders. With resource allocation processes designed and perfected to support sustaining innovations, they are constitutionally unable to respond. They are always motivated to go up-market, and almost never motivated to defend the new or low-end markets that the disruptors find attractive. We call this phenomenon asymmetric motivation.

You should definitely take a look at The Innovator’s Solution. It’s a great book with more interesting concepts in it, such as the cycle between integration and modularization in an industry, or the job-to-be-done approach to business strategy.

I will introduce you to two key concepts from the book: low-end and new-market disruption.

Low-end disruption, as described by Christensen, starts at the low end of the existing market. But the lower price is the result of new business processes, not just a lower margin on the same processes employed by established market players.

One example of this strategy is Walmart and other discount stores, which offer their customers cheaper goods but compensate for the lower margin with a much larger volume of sold inventory and a quicker inventory turnover. So they may have lower margins than traditional stores, but they turn their inventory over three times faster, thereby more than making up for it. And then there are all the elaborate logistics Walmart does, going so far as to direct its suppliers’ businesses in some ways.

Another example may be personal computers, which were a low-end disruption relative to mainframes, or now tablets (the iPad), which are disrupting the PC.

The key is that there are overserved customers in the existing markets who are willing to give up some features of the product in exchange for a lower price, simplicity, convenience, etc.

Established players are motivated not to fight the new entrant, as it is attacking the least profitable portion of their business.
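The margin-versus-turnover trade-off in the Walmart paragraph is simple arithmetic: the yearly gross profit earned on the money tied up in inventory is roughly the profit per turn multiplied by the number of turns per year. Here is a minimal Python sketch of that, assuming markup is measured on cost to keep the formula simple; the function names and every figure in it are hypothetical illustrations, not numbers from the book.

```python
def yearly_gross_profit(inventory_cost: float, markup: float, turns_per_year: float) -> float:
    """Gross profit a store earns in a year on its average inventory.

    inventory_cost: money tied up in inventory at any moment
    markup: profit per turn as a fraction of the inventory's cost (e.g. 0.30)
    turns_per_year: how many times the inventory is sold and restocked per year
    """
    return inventory_cost * markup * turns_per_year


def break_even_markup(reference_markup: float, turnover_ratio: float) -> float:
    """Lowest markup at which a store turning its inventory `turnover_ratio`
    times faster earns as much as a store with `reference_markup`."""
    return reference_markup / turnover_ratio


# Hypothetical figures: both stores tie up the same 100,000 in inventory,
# and the discounter turns it over three times faster at half the markup.
traditional = yearly_gross_profit(100_000, markup=0.30, turns_per_year=4)
discounter = yearly_gross_profit(100_000, markup=0.15, turns_per_year=12)

print(f"traditional store: {traditional:,.0f}")
print(f"discounter:        {discounter:,.0f}")
print(f"break-even markup at 3x turnover: {break_even_markup(0.30, 3):.0%}")
```

With these made-up numbers, the discounter earns 180,000 a year against the traditional store’s 120,000, despite having half the markup. The break-even function shows the general point: at three times the turnover, any markup above a third of the traditional one already wins.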

The target group of new-market disruption is non-consumers or non-consuming occasions. The disruptor is somehow able to transform an existing product and win these new consumers.

An example might be the first transistor radios, which were too low-quality for existing customers but were great for teenagers, who could listen to what they wanted for the first time. They also allowed everyone to listen on the go or outside the home, which was impossible before.

The best thing, from the disruptor’s point of view, is that established market players ignore you for a long time because you are not eating their lunch. At least not for some time. And then it is too late for them.

OK, this was a really quick and simplified intro. Again, read the book and/or watch the videos linked below.

In part 2, I will look at the implications of all this for the market of web applications.

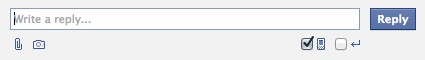

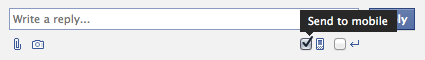

I tweeted that I don’t think the checkboxes Facebook uses in its Messages section (below) are the best way to go about the problem. I suggested using the icon itself as the toggle for this job.

I came up with a rough draft. Obviously, it would need more thinking and more knowledge of Facebook’s style guide, but you get the concept.

There were some interesting comments…

@soundake @jankorbel Checkboxes stand for *settings*, buttons stand for *actions*. Trying to merge them together is not good idea. #howgh :)

— Tomáš Hellebrand (@hellishcz) June 11, 2012

@jankorbel @hellishcz @soundake Checkboxes are just fine. No need to invent proprietary round checkboxes.

— Michal Illich (@michalillich) June 11, 2012

@jankorbel Don’t be clever, be obvious – especially on a website for a billion ordinary users (BFU)…

— Tomáš Kafka (@keff85) June 11, 2012

So here is my line of thinking.

Facebook wants its users to be able to know that they can:

And at the same time allow them to do it.

Both jobs are complicated enough that we can safely presume it is impossible to design something that enough users will get the first time they see it. That’s precisely why Facebook shows a tooltip when you hover over the icon or the checkbox. That’s something I would keep.

Now for the “allow the user to tell us he wants to activate the function” problem.

First, what does the user have to know to be able to use the current solution?

Not much to ask, granted; nonetheless, you can see there is some abstraction, especially for the novice user you are trying to protect with “easy checkboxes”.

What does the user need to know to be able to use my proposed solution?

Back to the job we want to solve.

I defined the job to be done here as letting the user know she can do something here, and giving her the tool to do it.

All in all, I think I removed some abstraction from the thought process our imaginary user has to go through: how the checkbox works.

I’m aware that my solution introduces a new problem: the user has to be able to distinguish between the two states (on / off). But I firmly believe that’s less abstract than the checkbox concept. It’s much closer to how a physical button works in the real world.

Looking forward to your comments.

Jiri Jerabek tweeted his take on the toggle.

I would not show the “Send to mobile” label and would stick with the current tooltip solution, and the “Enter mode” toggle should look the same as the “mobile” one. Otherwise, this is a move in the direction I think it should go.

By now you’ve probably seen the promotional video of the Leap, which created quite a stir all over the internet last week. But just in case, here it is.

It definitely is cool. I just have a problem with the Leap being positioned by the producer in a “We are changing the world” and “Say goodbye to your mouse and keyboard” way.

I would like to see a better interface for our desktops and laptops as much as the next guy, but I rather doubt it will be the Leap. Here is why.

Whether we like it or not, the desktop + windows + files metaphor currently used in OS X, Windows and other operating systems is built to be used with a keyboard and mouse. No matter how good the Leap is, it will not beat them on the current operating systems.

We see on touch devices like the iPhone or iPad that for a new input method to be better at some tasks, it required a completely different OS.

So, if you saw the video above and thought “Wow, this is how I want to control my computer”, forget it; it’s not going to happen. Not least because controlling something with virtual touch adds one more layer of abstraction compared to the real touch interfaces that are already coming in full swing.

OK, so what about applications developed for the Leap that run on classic OSes? That is definitely possible, but as it requires the user to invest $70 on top of the app price, we can predict it will be an interesting proposition for a very limited number of apps.

Yes, I know, the producer of the Leap says they are in talks about integrating the technology right into notebooks, but even if we give them the benefit of the doubt, we can be sure it will be in a very small share of the total market for some time. Who would develop an application for such a constrained niche? Chicken and egg, anyone?

I will tell you who might: for example, someone who wants to wow their target audience. Car dealer showrooms, jewellery stores, trade show booths and such.

Or highly custom applications in areas such as 3D modeling, and there are surely others.

The Leap could be useful, but it definitely will not be revolutionary.